Florida’s Ben Hill Griffin Stadium got a full-venue Wi-Fi network this fall. Credit all photos: Paul Kapustka, MSR

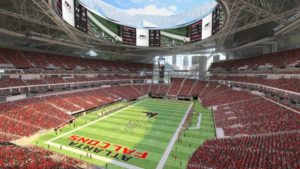

Though fans at Florida, as at most SEC stadiums, don’t really need any technology to fire them up, the addition of an NFL-caliber Wi-Fi network from Extreme Networks and Verizon was quickly embraced in Gainesville, with one early contest against Auburn cresting the 11-terabyte mark for total data used. If nothing else, with the network in place, a whole world outside the stadium walls gets to see the arm-chomping craziness that makes Ben Hill Griffin Stadium one of the nation’s premier college football venues, thanks to social media and other apps that let fans share their game-day experience.

But beyond the obvious benefits for fans, the new network also brought new capabilities to the Gators’ back-of-house business operations, including the ability to connect a new point-of-sale system for concession stands, along with the potential for better digital engagement with the people in the stands. According to Florida athletic director Scott Stricklin, the addition of Wi-Fi has given Ben Hill Griffin Stadium somewhat of a new lease on life, with the ability of the new to augment the old.

“We had a lot of the inherent challenges of putting technology into a 90-year-old venue,” said Stricklin, “but it’s also interesting how technology allows you to work around those challenges.”

By partnering with stadium-application developer VenueNext, which has recently expanded its services to include a back-end POS system, Florida can now offer fans at home games a wide menu of digital services, like the ability to order food and drinks ahead of time for pickup at express windows, and the ability to tap “Water Me” on a phone to have cold water delivered to the sunny-seat sections of the stadium.

For the business of running the stadium, the new VenueNext POS system allowed Florida to replace its old cash-drawer system with one that provides a connected way to manage concession stands.

“The new app and POS technology makes concessions better for everyone, it’s faster for those who order ahead of time, and the lines are shorter,” Stricklin said. “It’s a fascinating idea that technology can extend the useful life of a facility.”

Scenes from a retrofit: drilling and conduits

Editor’s note: This profile is from our latest STADIUM TECH REPORT, which is available to read instantly online or as a free PDF download! Inside the issue are profiles of the new Wi-Fi deployment at the University of Oklahoma, as well as profiles of wireless deployments at Chase Center and Fiserv Forum!

Like many other big-bowl stadiums at the big football schools, Ben Hill Griffin Stadium is a work built over time, with different renovations and additions adding layers of concrete and seating in ways that definitely did not have wireless networking in mind. And like many other schools, Florida had a tough time historically making the case for the capital outlay needed to bring Wi-Fi to a venue that might only see six or seven days of use a year.

At his previous job as athletic director for Mississippi State, Stricklin was part of an SEC advisory board that just five years ago didn’t see stadium Wi-Fi as a priority. Fast forward to 2019, and both the world in general and Stricklin have changed their views.

“At one point, Wi-Fi was seen as a luxury item [for stadiums],” said Stricklin. “Now, connectivity is like running water or electricity. You’ve got to have it.”

An RFP process for a full-stadium Wi-Fi network led Florida to choose a $6.3 million proposal from Verizon and Extreme, which have paired up for similar big-stadium deployments before, mainly at NFL venues like Green Bay’s Lambeau Field and Seattle’s CenturyLink Field.

“Obviously they’re experienced,” Stricklin said of the Extreme-Verizon pairing. “The success they’ve had before played a big role in increasing our comfort level.”

As at those stadiums, Verizon has its own separate SSID at Florida for Verizon customers, who can be automatically connected to the Wi-Fi upon entering the stadium. Other guests can sign in for free via a portal screen.

According to Matt Vincent, Florida’s director of infrastructure operations, construction of the network actually started during the 2018 season, with small-section roll-outs of the service. The heavy lifting, however, was done over this past summer, with Extreme finishing the full-stadium design with 1,478 APs, all 802.11ac Wave 2. Of that number, approximately 1,200 are in the main seating bowl, with most of those located in under-seat enclosures.

Unlike some other under-seat deployments where a separate core drill was done for each AP location, at Florida Extreme was able to reduce the number of concrete holes needed with some ingenious use of conduit and metal plating. In many areas of the stands, one hole through the concrete supports a number of APs, with conduit and metal plating covering the connections under and behind the seats and benches.

If you know what you are looking for, when you wander the maze of concourses under the seating sections you can spot a new network of conduit pipes that bring the cabling from the network out to the seats. In concourses, clubs and other areas with overhead mounting places, Extreme also used omni-directional antennas and other typical indoor equipment for coverage.

Getting to 11+ TB with fast, consistent coverage

At Florida’s biggest home game of the year, an Oct. 5 game against Auburn (a 24-13 Florida victory), Gator fans used 11.82 terabytes of data on the Wi-Fi network, one of the top totals ever seen at a college venue. Mobile Sports Report visited the stadium for a Nov. 9 game against Vanderbilt, and found strong performance on the network stadium-wide, including in harder-to-cover areas like concourses and narrow seating areas under overhangs.

Starting our testing in the club-seating lounge area, MSR got a Wi-Fi speedtest of 68.6 Mbps on the download and 65.2 Mbps on the upload, in front of a stuffed alligator with a very toothy grin. Heading down to the main entry gate, we got a mark of 50.2 Mbps / 61.8 Mbps as fans were streaming in just ahead of game time.

In a somewhat enclosed seating area on the lower level behind one end zone, we got a test of 21.9 Mbps / 29.9 Mbps just as the Gator mascots took the field for pregame activity. Moving up into the upper deck of section 43, we got a mark of 47.8 Mbps / 50.9 Mbps just after kickoff. Heading under the upper deck stands to the top concourse we got a mark of 43.6 Mbps / 55.5 Mbps, while drawing puzzled stares from fans wondering why we were taking pictures of the metal tubes running up the back of the seating floor.

Stopping under the other end zone for a Gatorade break, we sat at some loge-type seats and got a mark of 61.2 Mbps / 64.4 Mbps. Then moving back out into the stands we got our up-close picture of the sign welcoming us to “The Swamp,” where we got a speedtest of 54.0 Mbps / 53.8 Mbps.

An island of connectivity among the crowds

While Florida’s Vincent spends most of his game days working to ensure the network keeps running at top performance, he said that stadium staff enjoy the Wi-Fi as well.

“It’s been just a night and day difference for everyone since we added the Wi-Fi,” Vincent said, noting a tweet from a fan during the Auburn game that said “the only place in town his phone could connect was at the stadium.”

While saying the decision to deploy Wi-Fi was still somewhat of a leap of faith, Stricklin gave credit to the Florida staff members who prepared the reports outlining the benefits such a system could bring to the venue, for both the fans and the school.

“It’s a little like Indiana Jones when he steps out [over the cliff] and doesn’t see the floor below him,” Stricklin said. “It’s an intense capital outlay [to build a Wi-Fi network] and I don’t know that we’ll ever see it all returned in terms of numbers. But again, I thank the staff and partners like VenueNext who all had a great vision of what a connected stadium could do. With mobile ticketing, you have the opportunity to learn who’s in your stadium. There are concrete ways we can use connectivity to engage, learn about fans, and keep up with them. It’s a wise investment.”

The white dots are under-seat APs

Wi-Fi coverage was good throughout ‘The Swamp’

Conduit carrying cables to under-seat APs proceeds in an orderly fashion up the underside of the stands