With almost all work now being done remotely, it’s no surprise that team and venue IT staffs have virtual operations support at the forefront as the coronavirus shuts down most business operations.

In emails and calls to a small group of venue, team and school IT leaders, the one response common to every person who replied to our questions was the task of making sure that staffs could work online. According to our short list of respondents, that task included getting mobile devices into the hands of those who needed them, and setting up systems like virtual private networks (VPNs) and virtual desktop infrastructure (VDI) so that work could proceed in an orderly, secure fashion.

Since many of the people we asked for comments couldn’t reply publicly, we are going to keep all replies anonymous and surface the information only. The other main question we asked was whether or not the virus shutdowns had either delayed or accelerated any construction or other deployment projects; we got a mix of replies in both directions, as some venues are taking advantage of the shutdowns to get inside arenas that don’t have any events happening now. In addition to some wireless-tech projects that are proceeding apace, we also heard about other repairs to systems like elevators and escalators, which are more easily done when venues are empty.

But we also heard from some venues that shutdowns right now will likely push some projects back, maybe even a year or more. One venue that is largely empty in the summer will have to skip a planned network upgrade because it expects that normally empty dates in the fall and winter will be filled by events that were cancelled and need to be rescheduled. Another venue said that it has projects lined up and ready to go, but has not yet gotten budget approval to proceed.

Following our editorial from earlier this week, when we encouraged venues to make their spaces available for coronavirus response efforts, it was clear that many venues across the world had already started down that path. One of the quickest uses to surface was using venues’ wide-open parking lots as staging areas for mobile coronavirus testing; Miami’s Hard Rock Stadium, Tampa’s Raymond James Stadium and Washington, D.C.’s FedEx Field were among those with testing systems set up in parking lots.

Some venues have already been tabbed as places for temporary hospitals, with deployments at Seattle’s CenturyLink Field and New York’s Billie Jean King National Tennis Center already underway. Other venues, including Rocket Mortgage Fieldhouse in Cleveland and State Farm Stadium in Glendale, Ariz., have hosted blood drives.

Using venues to support coronavirus response efforts is a worldwide trend, with former Olympic venues in London and former World Cup venues in Brazil being proposed as support sites. Perth Stadium in Australia is being used as a public safety command center, while Chicago’s United Center is being used as a logistics hub.

Many other venues are stepping forward to offer free public Wi-Fi access in parking lots so that people who don’t have internet access at home can safely drive up and connect. Ball State University and the Jackson Hole Fairgrounds are just two of many venues doing this.

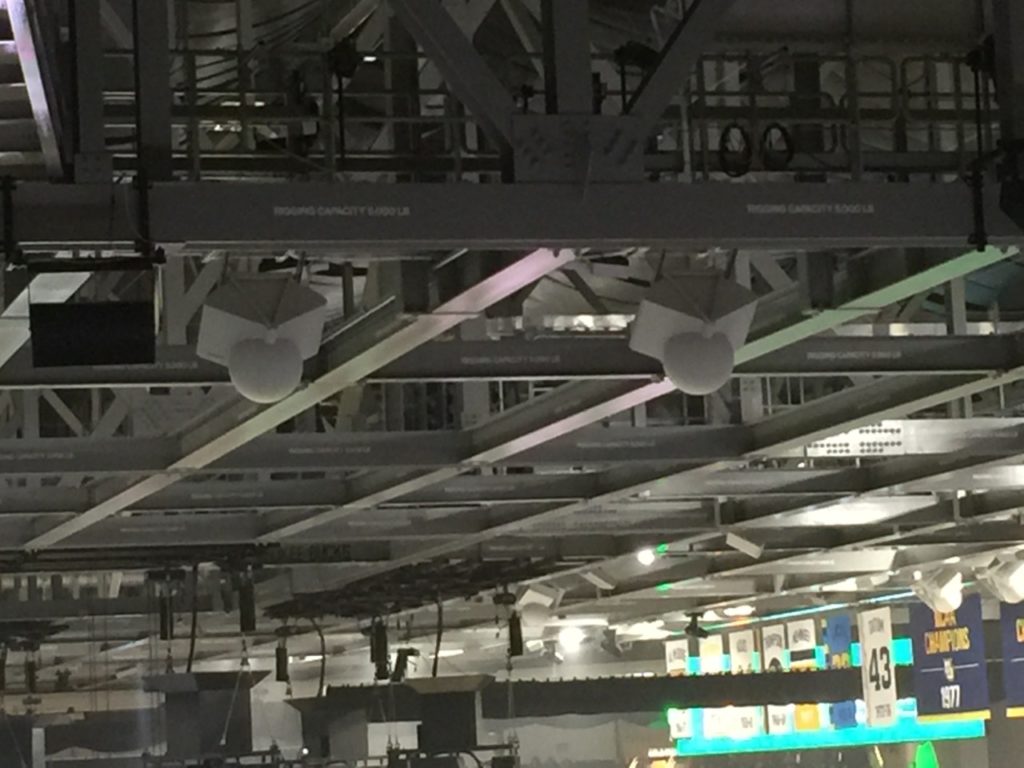

Venues are also offering their extensive kitchen and food-storage capabilities for the response effort. The Green Bay Packers have been preparing and delivering meals for schools and health-care workers, while the Pepsi Center in Denver offered cooler space to store food. Many other venues have contributed existing stores of food to charitable organizations and support efforts, since those items won’t be used at any of the many cancelled events.