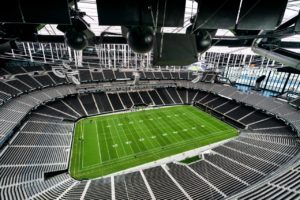

Allegiant Stadium reflecting the evening sky in Las Vegas. Credit: Matt Aguirre/Las Vegas Raiders

As befits its futuristic appearance, the new home of the newly named Las Vegas Raiders is also fitted with the latest in fan-facing technologies, deployments that will have to wait a bit before their potential can be realized.

Though the $1.9-billion, 65,000-seat stadium “officially” opened on Sept. 21 with a 34-24 Raiders victory over the New Orleans Saints, a decision made by the team earlier in the year meant that no fans were on hand to witness the occasion. But when fans are allowed to enter the building, they will be treated to what should be among the best game-day technical experiences anywhere, as a combination of innovation and expertise has permeated the venue’s deployments of wireless and video technologies.

The Raiders assembled an all-star team of partners to deliver what vice president of IT Matt Pasco calls “a top-notch fan experience.” The lineup includes a Wi-Fi 6 network using equipment from Cisco; an extensive cellular distributed antenna system (DAS) deployed by DAS Group Professionals (DGP) using gear from JMA Wireless and MatSing; integrated fiber, copper and cable infrastructure from CommScope; backbone services from Cox Business/Hospitality Network; digital displays from Samsung; and design and converged network planning directed by AmpThink.

Finally getting to build a stadium network

Editor’s note: This story is from our recent STADIUM TECH REPORT Fall 2020 issue. Also in this issue is a profile of the technology behind SoFi Stadium in Los Angeles.

For Pasco, who is in his 19th year with the Silver and Black, the entity that became Allegiant Stadium was the realization of something he’d never had: A stadium network to call his own.

From 1995 until the end of last season, the then-Oakland Raiders played home games in the Oakland-Alameda County Coliseum, where they were tenants and shared the building with MLB’s Oakland Athletics.

According to Pasco, since the Raiders didn’t have the ability to direct capital improvements, “we never got to build a sufficient DAS, and we never got to have sufficient Wi-Fi for all our fans.”

Fast-forward to the plans that eventually took shape with the team’s move to Las Vegas, and for a change Pasco was able to start thinking about what that meant from a technology perspective. With his long tenure and relationships around the league, Pasco said he embarked on a multiyear “stadiums tour,” accompanying the team to road games and looking at what other teams had done at their venues.

“I kept a big notebook on what I liked, and what didn’t seem to work,” Pasco said. “I sat down with a lot of my counterparts and talked about what worked well, and what they had to spend time with. So I got a really good sense of what was possible.”

Wi-Fi 6 arrives just in time

One fortunate event for Allegiant Stadium’s wireless deployment was the 2019 arrival of equipment that supported the new Wi-Fi 6 standard, also known as 802.11ax. With its ability to support more connections, higher bandwidth and better power efficiency for client devices, Wi-Fi 6 is a great technology to start off with, Pasco said.

“We were very fortunate that Wi-Fi 6 was released just in time [to be deployed at Allegiant Stadium],” Pasco said. “The strength of 802.11ax will pay off in a highly dense stadium with big crowds.”

The Raiders’ choice of Cisco as a Wi-Fi provider wasn’t a complete given, even though Pasco said that the team has long been “a Cisco shop” for not just Wi-Fi but for core networking, IPTV and phones.

“We did look at Extreme [for the new build] but Cisco just has so many pieces of the stack,” Pasco said. “Things like inconsistencies between switch maker A and IPTV vendor B are a little less likely to happen. And they’ve done good things in so many buildings.”

Pasco also praised the Wi-Fi network design and deployment skills of technology integrator AmpThink, which used an under-seat deployment design for Wi-Fi APs in the main seating bowl. There are approximately 1,700 APs throughout the venue.

“I am thrilled with the work AmpThink has done,” said Pasco, who admitted that as a network engineer, he had “never done” a full stadium design before.

“They [AmpThink] have built networks at more than 70 venues, so they came highly recommended,” Pasco said. “And meeting their leadership early on sold me pretty quickly.”

Part of what AmpThink brought to the stadium was a converged network design, where every connected device is part of the same network.

Innovation abounds in the DAS

If the Wi-Fi world is already moving forward with general availability of Wi-Fi 6 gear, the cellular side of the equation is in a much different place as carriers contemplate how to best move forward with their latest standard, 5G.

For venues currently adding or upgrading a DAS, the 5G question looms large. One of the hardest things about planning for 5G is that the main U.S. cellular carriers will all have different spectrum bands in use, making it hard to deploy a single “neutral host” DAS to support all the providers. To date, all 5G deployments in stadiums have been single-carrier builds – but that won’t be the case in Allegiant Stadium, thanks to some new gear from JMA.

Steve Dutto, president of DGP – which used JMA gear at many of its other stadium DAS installations, including the San Francisco 49ers’ Levi’s Stadium and most recently at the Texas Rangers’ new home, Globe Life Field – said the DAS deployed at Allegiant Stadium “is like no other” NFL-venue cellular network.

“By selecting JMA DAS equipment we were able to deploy a [5G standard] NR radio capable system from day one,” Dutto said. “This means that all carriers can deploy 5G low- and mid-band technology without any additional cost or changes to the DAS system.”

According to Dutto and JMA, the JMA TEKO DAS antennas cover all major licensed spectrum in use, from 600 MHz to 2500 MHz, and will provide ubiquitous coverage over the entire stadium.

“Carriers will be able to deploy their 4G technologies along with their low- and mid-band 5G technology over all of the stadium coverage area,” Dutto said. “No upgrades will be required. All carriers will need to do is provide their base radios in the headend.”

According to Todd Landry, corporate vice president, product and market strategy at JMA, the TEKO gear used at Allegiant Stadium “employs an industry unique nine-band split architecture, placing lower frequency bands in different radios than higher frequency bands.” This approach, Landry said, lets the stadium “optimize the density of higher band cells versus lower bands” while also reducing the total number of radios needed for low bands by half.

Landry said the JMA gear also has integrated support for the public safety FirstNet spectrum band, and is software programmable, allowing venue staff to “turn on” capabilities per carrier as needed, eliminating on-site visits to install additional radios or radio modules. According to DGP, the DAS has 75 high-band zones and 44 low-band zones in the main seating bowl, with a total of 117 high-band and 86 low-band zones throughout the venue.

Adding in the MatSing antennas from above

One twist in the DAS buildout was the addition of 30 MatSing lens antennas to the cellular mix, a technology solution to potential coverage issues in some hard-to-reach areas of the seating bowl. According to Pasco, the Raiders were trying to solve a typical DAS issue – namely, how to best cover the premium seats closest to the field, which are the hardest to reach with a traditional top-down DAS antenna deployment.

“We looked at a hybrid approach, to use under-seat [DAS] antennas for the first 15 or 20 rows, but the cost was astronomical,” Pasco said. “We also heard that [an underseat deployment] may not have performed as well as we wanted.”

The roof structure provided a perfect mounting place for the MatSing Lens antennas. Credit: Matt Aguirre/Las Vegas Raiders

“I had heard about the full MatSing deployment at Amalie [Arena] and wondered if we could do that,” said Pasco. Fortunately for the Raiders, the architecture of the stadium, with a high ring supporting the transparent roof, turned out to be a perfect place to mount MatSing antennas, which use line-of-sight transmission to precisely target broadcast areas. For Pasco, a move toward more MatSings was a triple-play win, since it removed the need for other antennas from walkways and overhangs, was less costly than an under-seat network, and should prove to have better performance, if network models are correct.

“It is the perfect marriage of cost reduction, better performance and aesthetics,” said Pasco of the MatSing deployment. “We even painted them black, like little Black Holes. It’s one of the most innovative decisions we made.”

“We are excited to be part of the Allegiant Stadium network,” said MatSing CEO Bo Larsson. “It is a great venue to show the capability of MatSing Lens antennas.”

Supporting wireless takes a lot of wires

Behind all the wireless antenna technology in Allegiant Stadium – as well as behind the IPTV, security cameras and other communications needs – sits some 227 miles of optical fiber and another 1.5 million feet of copper cable, provided by CommScope.

A good share of the 100 Gbps fiber connections is used to support the stadium’s DAS network, with the capacity built not just for current needs but for expected future demands as well. According to CommScope senior field applications engineer Greg Hinders, the “spider web of single-mode fiber” includes multiple runs of 864-strand links, which break out in all directions to support all the networking needs.

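For the cable connections to the Wi-Fi gear, CommScope’s design went with Cat 6A cable, which is rated for twice the bandwidth of standard Cat 6 (500 MHz versus 250 MHz) and can carry 10 Gbps over a full 100-meter run, compared with roughly 55 meters for Cat 6.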

“The new APs really require it [Cat 6A] so we went standard with Cat 6A throughout the building,” Hinders said.

And if the job wasn’t tough enough – limited construction space at the stadium required that CommScope and its distribution partner Anixter stage the network in an offsite warehouse – CommScope also had to make sure that exposed wiring was colored silver and black to match the stadium design and the team’s colors.

While single-mode fiber is usually colored yellow, Hinders is enough of a football fan to know that Pittsburgh Steelers colors wouldn’t fly in the Raiders’ home.

“It all had to be black and grey,” said Hinders. “Black and yellow isn’t good for the Raiders.”

The MatSing antennas also posed a challenge for CommScope, since each MatSing antenna requires 48 individual connections for all the radios in each device.

“It was [another] logistical challenge,” said Hinders. “But it was great to see [the entire network] all come to fruition. It’s nice to know we had a part in putting it all together.”
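To give a rough sense of the scale behind that logistical challenge, the two figures quoted above (30 MatSing antennas, 48 connections each) can be multiplied out. This is a back-of-the-envelope sketch only: it assumes every antenna needs the same number of connections, and the function and constant names are illustrative rather than anything used by CommScope or the Raiders.

```python
# Figures quoted in the article.
MATSING_ANTENNAS = 30          # lens antennas added to the DAS
CONNECTIONS_PER_ANTENNA = 48   # individual connections per antenna, per CommScope


def total_matsing_connections(antennas: int, per_antenna: int) -> int:
    """Estimate total connections, assuming every antenna needs the same count."""
    return antennas * per_antenna


total = total_matsing_connections(MATSING_ANTENNAS, CONNECTIONS_PER_ANTENNA)
print(f"Estimated MatSing connections: {total:,}")
```

Under that assumption the estimate comes to 1,440 individual connections for the MatSing antennas alone.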

Providing enough backbone bandwidth

To ensure that Allegiant Stadium had enough backbone bandwidth to support all its communications needs, the Raiders turned to Cox Business/Hospitality Network, a partner with considerable telecom assets in the Las Vegas area.

Jady West, vice president of hospitality for Cox, noted that not only does Cox have experience in providing data services to high-demand venues (including State Farm Stadium in Glendale, Ariz., which routinely hosts big college games and the Super Bowl), it also has a wealth of resources in and around Las Vegas, providing services to the big casino hotels and the Las Vegas Convention Center.

With a 100 Gbps regional network, West said Cox is able to bring “quite a bit of power and flexibility” to the equation. Supplying services to big events like CES at the LVCC, West said, “takes a specialized skill, and that’s what my team does. This is what we do.”

Specifically for the Raiders, Cox built two redundant 40 Gbps pipes just to serve the needs of Allegiant Stadium. Additionally, Cox built a 10 Gbps metro Ethernet link between the stadium and the team’s headquarters and practice facility in nearby Henderson, Nev., a connection that Pasco said would be essential for stadium operations as well as the on-field football business.

“Both the video production staff and the football staff can now push information back and forth like we’re in the same building,” said Pasco, who gave high praise to Cox’s work. On the production side, crews at headquarters can create content for videoboards and displays, and have it at the stadium instantaneously; similarly, video from the stadium’s field of play or from practices can be shared back and forth as needed, in a private and real-time fashion.

West said Cox, which also provides game-day network support and a “NOC as a service” solution, knows that the data demands of the big-time events that will likely be held at Allegiant Stadium will only keep increasing, and it built its systems to support that growth.

“The most demanding events are things like CES, and NFL games,” West said. “This network is built for the future, to hold up for all those events.”

Videoboards to fit the design

Last but certainly not least in the technology arsenal at Allegiant Stadium are the Samsung videoboards. Above the south end zone, the largest board measures approximately 250 feet long by 49 feet high, according to Pasco. Two identically sized boards of 49 feet by 122 feet are in the corners of the north end zone. Including ribbon boards, Pasco said there is a total of 40,000 square feet of LED lights inside the seating bowl.
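For readers who want to see how those dimensions relate to the 40,000-square-foot total, the arithmetic can be sketched as follows. The board sizes are the approximate figures Pasco gave, and the "remaining" value is simply a derived estimate of what is left for ribbon boards and any other LED surfaces, not a number reported by the team. All names in the snippet are illustrative.

```python
# Approximate dimensions quoted in the article, in feet (length, height).
SOUTH_BOARD_FT = (250, 49)
NORTH_BOARD_FT = (122, 49)
NORTH_BOARD_COUNT = 2
TOTAL_LED_SQFT = 40_000  # total inside the seating bowl, per Pasco


def area_sqft(dims: tuple[int, int]) -> int:
    """Area of a rectangular board in square feet."""
    length, height = dims
    return length * height


south_area = area_sqft(SOUTH_BOARD_FT)
north_area = NORTH_BOARD_COUNT * area_sqft(NORTH_BOARD_FT)
main_boards_area = south_area + north_area

# Derived estimate only: ribbon boards and any other LED surfaces.
remaining_area = TOTAL_LED_SQFT - main_boards_area

print(f"South end zone board: {south_area:,} sq ft")
print(f"Two north corner boards: {north_area:,} sq ft")
print(f"Three main boards combined: {main_boards_area:,} sq ft")
print(f"Left for ribbon boards and other LED: {remaining_area:,} sq ft")
```

On these approximate figures, the three main boards account for about 24,200 of the 40,000 square feet, or roughly 60 percent of the LED area in the bowl.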

On the stadium’s exterior there is another large videoboard, a 275-foot mesh LED screen that fits in perfectly with the bright lights of the city’s famed Strip. Inside, the venue will also have approximately 2,400 TV screens for information and concessions, with all the systems controlled by the Cisco Vision display management system.