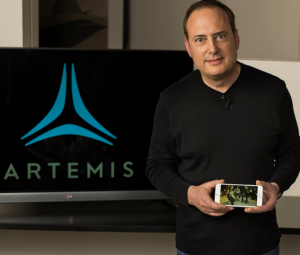

In the meantime, owners of large public venues (like sports stadiums) can now try the Artemis technology for themselves by testing an Artemis I Hub and antenna combination in a trial arrangement with the company. Announced last year, Artemis’ pCell technology claims to solve two of the biggest problems in wireless networking, namely bandwidth congestion and antenna interference, by turning much of the current technology thinking on its head. If the revolutionary networking idea from longtime entrepreneur Steve Perlman pans out, stadium networks in particular could become more robust while also being cheaper and easier to deploy.

In a phone interview with Mobile Sports Report prior to Tuesday’s announcement, Perlman said Artemis expects to get FCC approval for its pCell-based wireless service sometime in the next six months. When that happens, Artemis will announce pricing for its cellular service, which will work with most existing LTE phones once an Artemis-provided SIM card is added. Phones with dynamic SIMs, like some of the newer devices from Apple, will be able to simply choose the Artemis service without having to add a card, Perlman said.

Though he wouldn’t announce pricing yet, Perlman said Artemis services would be less expensive than current cellular plans. He said that there will likely be an option for local San Francisco service only, and another that includes roaming ability on other providers’ cellular networks for use outside the city.

More proof behind the yet-untasted pudding

When Perlman, the inventor of QuickTime and WebTV, announced Artemis and its pCell technology last year, it was met with both excitement — for its promise of delivering faster, cheaper wireless services — and no shortage of skepticism, about whether it would ever become a viable commercial product. Though pCell’s projected promise of using cellular interference to produce targeted, powerful cellular connectivity could be a boon to builders of large public-venue networks like those found in sports stadiums, owners and operators of those venues are loath to build expensive networks on untested, unproven technology. So it’s perhaps no surprise that Artemis has yet to name a paying customer for its revolutionary networking gear.

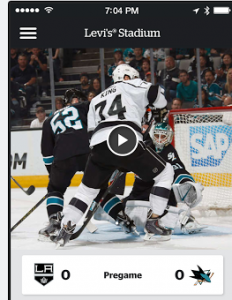

But being able to name names and talk about spectrum deals are steps bringing Artemis closer to something people can try, and perhaps buy. VenueNext, the application development firm behind the San Francisco 49ers’ Levi’s Stadium app, confirmed that it is testing Artemis technology, and the San Francisco network will provide Perlman and Artemis with a “beta” type platform to test and “shake out the system” in a live production environment. “We need to be able to move quickly, get feedback and test the network,” said Perlman about Artemis’ decision to run its own network first, instead of waiting for a larger operator to implement it. “We need to be able to move at startup speed.”

For stadium owners and operators, the more interesting part of Tuesday’s news may be the Artemis I Hub, a device that supports up to 32 antennas, indoor for now with outdoor units due later this year. The trial testing will allow venue owners and operators to kick the tires on pCell deployment and performance on their own, instead of just taking Artemis’ word for it. Artemis has also published a lengthy white paper that fleshes out the explanation of its somewhat radical approach to cellular connectivity, another step toward legitimacy, since publishing such a document publicly suggests that Artemis is confident of its claims.

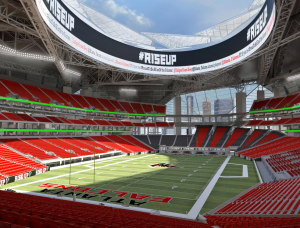

If networking statistics from recent “big” stadium events are any barometer, the field of stadium networking may need significant help soon, since fans are using far more data than ever before, including the 13+ terabytes of traffic at the Super Bowl in Phoenix and the 6+ TB figure from the college football playoff championship game. To Perlman, the idea of trying to use current Wi-Fi and cellular technology to address a crowded space doesn’t make sense.

“You simply cannot use interfering technology in a situation where you have closely packed transmitters,” said Perlman. “You just can’t do it.”

Artemis explained

If you’re unfamiliar with the Artemis idea, at its simplest level it’s a new approach to connecting wireless devices to antennas that, if it works as advertised, turns conventional cellular and Wi-Fi thinking on its head. What Perlman and Artemis claim is that they have developed a way to build radios that transmit signals “that deliberately interfere with each other” to establish a “personal cell,” or pCell, for each device connecting to the network. (See this BusinessWeek story from 2011 that explains the Artemis premise in detail. This EE Times article also has more details, and this Wired article is also a helpful read.)

Leaving the complicated math and physics to the side for now, if Artemis’ claims hold true, its technology could solve two of the biggest problems in wireless networking, namely bandwidth congestion and antenna interference. In current cellular and Wi-Fi designs, devices share signals from antenna radios, meaning the bandwidth available to each device shrinks as more people connect to a cellular antenna or a Wi-Fi access point. Adding more antennas is one way to solve congestion problems, but especially in stadiums and other large public venues, you can’t place antennas too close to each other because of signal interference.

The Artemis pCell technology, Perlman said, trumps both problems by delivering a centimeter-sized cell of coverage to each device, which can follow the device as it moves around in an antenna’s coverage zone. Again, if the company’s claim of delivering full bandwidth to each device “no matter how many users” are connected to each antenna holds true, stadium networks could theoretically support much higher levels of connectivity at possibly a fraction of the current cost.
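To make the contrast concrete, here is a rough back-of-the-envelope sketch in Python. It is not Artemis’ method or math; it only compares the simplified "shared cell" picture described above with the outcome Artemis claims. The function names and the 100 Mbps capacity figure are illustrative assumptions, not numbers from the company.

```python
def shared_per_user_mbps(cell_capacity_mbps: float, users: int) -> float:
    """Simplified conventional model: users split one cell's capacity evenly."""
    if users < 1:
        raise ValueError("users must be at least 1")
    return cell_capacity_mbps / users


def pcell_claimed_per_user_mbps(cell_capacity_mbps: float, users: int) -> float:
    """Artemis' claim as reported: full capacity per device, whatever the user count.

    This models the claim only; it does not demonstrate that the claim holds.
    """
    if users < 1:
        raise ValueError("users must be at least 1")
    return cell_capacity_mbps


if __name__ == "__main__":
    capacity = 100.0  # hypothetical capacity in Mbps, chosen for illustration
    for users in (1, 10, 100):
        shared = shared_per_user_mbps(capacity, users)
        claimed = pcell_claimed_per_user_mbps(capacity, users)
        print(f"{users:>3} users: shared {shared:6.1f} Mbps, pCell claim {claimed:6.1f} Mbps")
```

In the shared model, per-device throughput falls in proportion to the crowd, which is the congestion problem stadium operators see on game day. The second function simply restates the “no matter how many users” claim, which still has to be proven in live deployments.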

The next step in Artemis’ evolution will be to see if (or how well) its technology works in the wild, where everyday users can subject it to the unplanned stresses that can’t be tested in the lab. With any luck and FCC willing, we won’t have to wait another year for the next chapter to unfold.