AT&T Stadium before the college football playoff championship game. Credit all photos: Paul Kapustka, MSR

John Winborn, chief information officer for the Dallas Cowboys, said the AT&T-hosted Wi-Fi network at AT&T Stadium carried 4.93 TB of traffic during Monday’s game between Ohio State and Oregon. That is far higher than any total we’ve heard of for a single-game, single-venue event. AT&T cellular customers, Winborn said, used an additional 1.41 TB of wireless data on the stadium DAS network, for a measured total of 6.34 TB of traffic. The real total is likely another terabyte or two higher, since these figures don’t include any traffic from other carriers (Verizon, Sprint, T-Mobile) carried on the AT&T-run neutral-host DAS. (Other carrier reps, please feel free to send us your data totals as well!)

The national championship numbers blew away the data traffic totals from last year’s Super Bowl, and also eclipsed the previous high-water Wi-Fi mark we knew of: the 3.3 TB set by the San Francisco 49ers during the opening game of the season at their new Levi’s Stadium facility. Since we haven’t heard of any other event coming close, we’ll crown AT&T Stadium and the college playoff championship as the new top dog in the wireless-data consumption arena, at least for now.

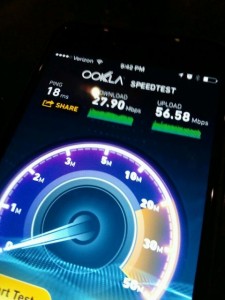

Coincidentally, MSR on Tuesday was touring the University of Phoenix Stadium and the surrounding Westgate entertainment district, where Crown Castle is putting the final touches on a new complex-wide DAS. The new DAS includes antennas on buildings and railings around the restaurants and shops of the mall-like Westgate complex, as well as inside and outside the UoP Stadium. (We’ll have a full report soon on the new DAS installs, including antennas behind fake air-vent fans on the outside of the football stadium to help handle pre-game crowds.) The University of Phoenix Stadium also had its entire Wi-Fi network ripped out and replaced this season to better serve the wireless appetites expected for the big game on Feb. 1.

At AT&T Stadium on Monday, we learned that almost 300 new Wi-Fi access points and a number of new DAS antennas had been installed there since Thanksgiving in anticipation of heavy traffic Monday night. Our exclusive on-the-scene tests of the Wi-Fi and DAS networks found no glitches or coverage holes, which is probably part of the reason so many people used so much data.

UPDATE: AT&T has issued an official press release, which says essentially the same thing our post does.